Applications of artificial intelligence (AI) to health care took a major step forward with two noteworthy events in February in the cardiovascular space.

In the first case, the U.S. Food and Drug Administration (FDA) approved the marketing of a clinical decision support tool that flags signs of stroke on computed tomography (CT) images and alerts a neurovascular specialist, so that the patient can receive appropriate treatment sooner.

Viz.AI’s software uses a form of AI to analyze CT images of the brain and detect suspected large vessel blockages. If a blockage is spotted, the Viz.AI Contact app sends a text alert to the specialist, who still needs to review the images on a clinical workstation before determining treatment. To support the software’s approval, Viz.AI submitted a retrospective study that compared the AI program’s performance in detecting large vessel blockages with the analysis of two trained neuroradiologists across 300 CT images. The FDA used this real-world evidence to conclude that Viz.AI Contact could notify a neurovascular specialist sooner than a physician could in cases where a blockage was suspected. Time is of the essence in diagnosing stroke, so this new AI-based triage method is a step forward in bringing better care to patients.

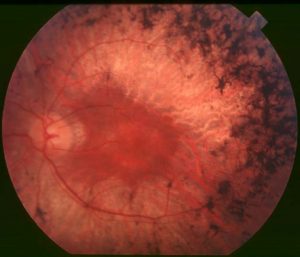

In the second case, Google, its health technology subsidiary Verily, and the Google Brain research group revealed a significant advance in the application of AI to assess the risk of stroke and heart attack. On February 19, they published research in Nature Biomedical Engineering on the use of a convolutional neural network, an AI-based computational model that excels at analyzing images, to predict a patient’s risk of stroke or heart attack from a retinal photo.

Observing, analyzing, and quantifying disease associations from medical images is difficult because of the wide variety of features, patterns, colors, values, and shapes that may be present in such images. The Google Brain researchers used deep learning models trained on data from 284,335 patients and validated on two independent datasets from 12,026 and 999 patients. Even from these limited patient datasets, and using retinal images alone, the researchers were able to predict several cardiovascular risk factors: each patient’s age (within 3.26 years), gender (97% accuracy), smoking status (71%), and blood pressure (within 11.23 mmHg). The researchers found that their trained model could predict a patient’s risk of stroke or heart attack over the next five years approximately 70% of the time. The deep learning models used anatomical features, such as variations in the optic disc or blood vessels, to generate their predictions.
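To make figures such as "within 3.26 years" and "97% accuracy" more concrete, the sketch below shows one common way such numbers are computed. It assumes the "within" figures are mean absolute errors and the percentages are simple classification accuracies; the published study may define its metrics differently. The function names and all values are hypothetical toy data, not results from the research.

```python
from typing import Sequence


def mean_absolute_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Average size of the prediction error, in the same units as the inputs."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of predictions that exactly match the true label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical toy values for four patients (NOT data from the study).
true_ages = [52.0, 61.0, 47.0, 70.0]
predicted_ages = [55.0, 58.0, 49.0, 66.0]
true_smoker = ["yes", "no", "no", "yes"]
predicted_smoker = ["yes", "no", "yes", "yes"]

age_mae = mean_absolute_error(true_ages, predicted_ages)
smoker_accuracy = accuracy(true_smoker, predicted_smoker)

print(f"age: within {age_mae:.2f} years on average")
print(f"smoking status: {smoker_accuracy:.0%} accuracy")
```

A continuous quantity such as age or blood pressure is summarized by an average error in its own units, while a yes/no attribute such as smoking status is summarized by the share of correct predictions.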

Mainstream cardiovascular risk calculators combine a similar set of factors (blood pressure, BMI, glucose and cholesterol levels, etc.) to estimate disease risk with similar accuracy. However, the data required to calculate the 10-year risk is available for fewer than 30% of patients.

This is Google’s second application of AI and retinal images to improve health diagnoses. In November 2016, the company announced the results of a study on the use of such deep learning methods for the early detection of diabetic retinopathy, which could potentially improve care for the 415 million diabetics worldwide.

While Google’s approach is not yet advanced enough for real-world use, the researchers believe their computational models will become even more accurate with the analysis of larger patient data sets. With such further development, this approach could help physicians more quickly identify patients’ health risks at lower cost from a procedure now commonly used to detect eye diseases.